There are two main types of autofocus—phase detection and contrast detection. Generally speaking, phase detection is used in DSLRs whilst contrast detection is employed in compact and mirrorless cameras.

Technically speaking, we’re talking about passive autofocus: “passive” simply means that the camera uses only the information (light) that enters through the lens to achieve focus. It doesn’t, for instance, send out sonar (ultrasonic) pulses or infrared beams to judge the distance of the subject, as an active system might.
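For contrast, here is a minimal sketch of how a sonar-style active system could turn an echo into a distance. The pulse's round-trip time is measured and halved. The speed of sound (343 m/s, dry air at about 20 °C) and the function name are assumptions for illustration only.

```python
# Assumed speed of sound in dry air at roughly 20 °C.
SPEED_OF_SOUND_M_S = 343.0


def sonar_distance_m(echo_delay_s: float) -> float:
    """Distance to the subject from the round-trip time of an ultrasonic pulse."""
    # The pulse travels to the subject and back again, so halve the round trip.
    return SPEED_OF_SOUND_M_S * echo_delay_s / 2


print(sonar_distance_m(0.02))  # a 20 ms echo: a subject roughly 3.4 m away
```

A passive system never emits anything like this; it infers focus purely from the light arriving through the lens.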

Phase Detection

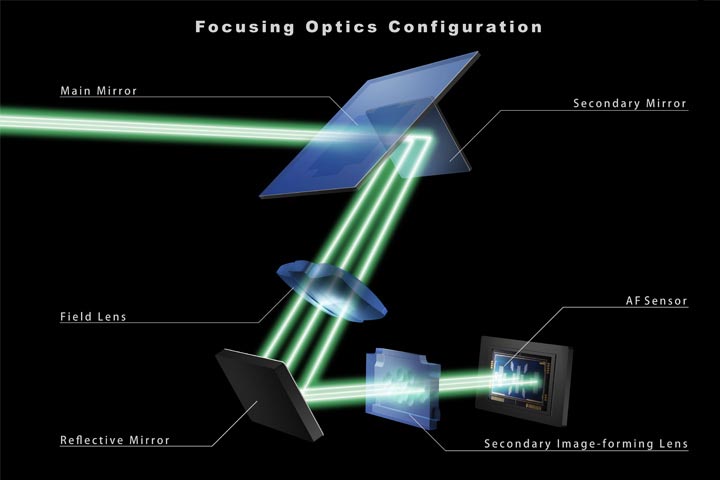

Phase detection works by splitting the light coming through the lens. The semi-transparent main (reflex) mirror reflects part of the light up to the viewfinder, while the rest passes through it to a small secondary mirror that directs it down to a dedicated autofocus (AF) unit at the bottom of the camera. Light rays from opposite sides of the lens are then focused onto (at least) two micro-sensors within the AF unit.

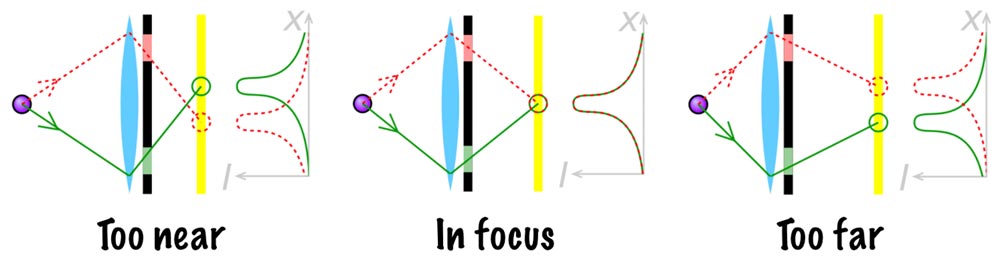

These micro-sensors are specialised strips that measure light intensity patterns. The pattern from each micro-sensor is analysed and the lens focus is adjusted until the patterns from both micro-sensors match, at which point the image should be in focus. The interactive example on the Stanford page shows this process in motion (play with the slider).

From the way the light falls on the sensors, the camera knows whether it is front- or back-focused, and by roughly how much, so it can drive the lens in the right direction straight away. This is one of the primary advantages of phase detection autofocus, since it significantly reduces the time the lens spends “hunting”.
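The matching step can be sketched as a search for the shift that best aligns the two intensity patterns. The sign of that shift gives the direction to drive the lens; its size indicates how far. This is a simplified illustration: the strip data is invented, the mean-squared-difference matching is one common choice rather than any manufacturer's method, and the mapping from sign to front- or back-focus is an arbitrary convention.

```python
def pattern_shift(left: list[float], right: list[float], max_shift: int) -> int:
    """Return the shift s (in pixels) for which right[i + s] best matches left[i].

    Uses the mean squared difference over the overlapping samples; max_shift
    must be smaller than the strip length so every shift has some overlap.
    """
    best_shift, best_error = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(left[i], right[i + s]) for i in range(len(left)) if 0 <= i + s < len(right)]
        error = sum((x - y) ** 2 for x, y in pairs) / len(pairs)
        if error < best_error:
            best_shift, best_error = s, error
    return best_shift


def focus_state(shift: int) -> str:
    """Map a measured shift to a focus state.

    Which sign means front- or back-focus depends on the AF module's optics;
    the convention used here is an arbitrary assumption.
    """
    if shift == 0:
        return "in focus"
    return "back-focused" if shift > 0 else "front-focused"


edge = [0, 0, 0, 1, 5, 9, 10, 10, 10, 10, 10, 10]
left_strip = edge
right_strip = [0, 0] + edge[:-2]  # the same pattern displaced by 2 pixels

shift = pattern_shift(left_strip, right_strip, max_shift=4)
print(shift, focus_state(shift))  # 2 back-focused
```

A single reading tells the camera both which way and how far to move the lens, which is why phase detection hunts so little.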

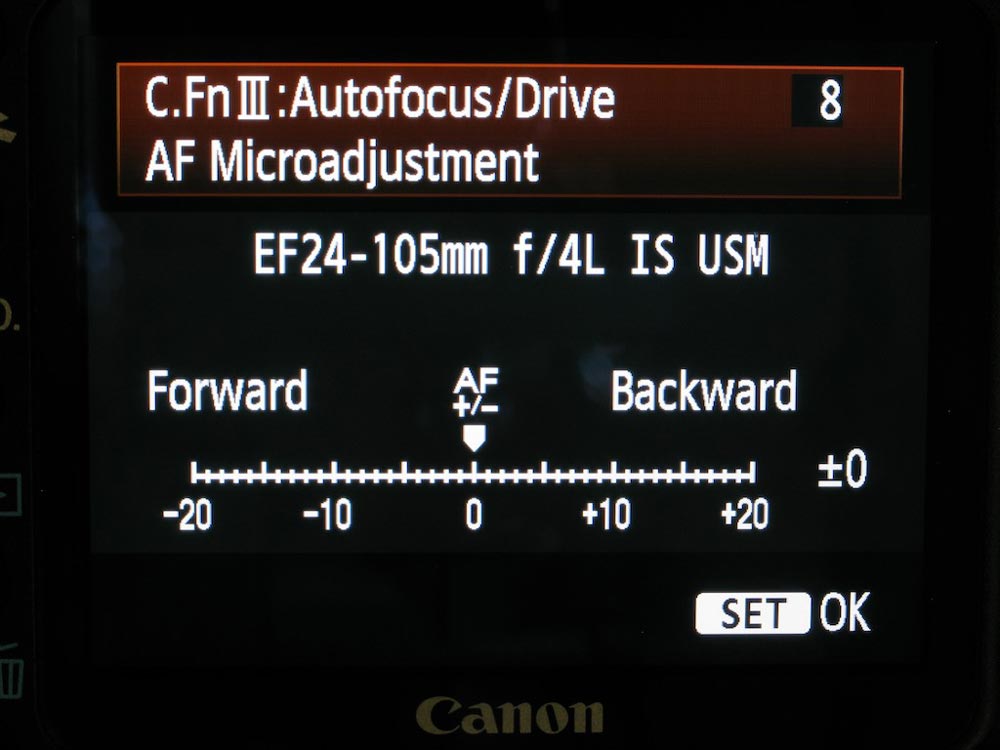

Of course, this all relies on precise alignment of the sensors and mirrors during manufacturing: even a slight deviation in the spacing of the two AF sensors can cause the camera to front- or back-focus. This is especially challenging because the lens must be precisely calibrated as well; minuscule misalignments in the camera and in the lens can compound, pushing the final margin of error outside an acceptable range. This is why higher-end DSLRs offer in-camera AF adjustment (programmable for each lens), letting the user compensate for consistent front- or back-focusing errors.
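Conceptually, per-lens AF adjustment is a lookup table of offsets added to whatever focus position the AF system computes. The sketch below assumes hypothetical lens names, abstract focus-step units and a sign convention of its own; real cameras expose this through their menus, typically as a small signed range, and the internal mechanism is not documented here.

```python
# Hypothetical per-lens AF microadjustment table. The lens names, the unit
# (abstract focus steps) and the sign convention are illustrative assumptions.
AF_MICROADJUST = {
    "lens_a": +3,  # found to front-focus, so push focus further back
    "lens_b": -5,  # found to back-focus, so pull focus forward
}


def corrected_focus_position(measured_position: float, lens_id: str, step_size: float = 1.0) -> float:
    """Apply the stored per-lens offset to the AF system's computed focus position."""
    offset = AF_MICROADJUST.get(lens_id, 0)  # uncalibrated lenses get no correction
    return measured_position + offset * step_size


print(corrected_focus_position(100.0, "lens_a"))  # 103.0
print(corrected_focus_position(100.0, "lens_c"))  # 100.0 (not in the table)
```

Because the offset is stored per lens, it can only correct errors that recur consistently; random shot-to-shot variation is not fixed this way.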

Some cameras now offer on-sensor phase detection, in which dedicated AF sensor sites are hidden among the pixels of the main image sensor (a few hundred AF sites versus around 20 million pixels). At the moment, however, its performance by no means matches that of DSLRs with dedicated AF units.

Contrast Detection

Unlike phase detection, contrast detection does not require a dedicated AF unit (in any case, without a reflex mirror the light cannot be split and redirected as it is for phase detection). Analysis is performed on the image sensor itself, and best focus is found on the principle that the point of focus coincides with the highest acutance (i.e. local contrast, the difference in light intensity between neighbouring pixels). It is the main method used in compact and mirrorless cameras and is, generally speaking, more accurate than phase detection, because it judges focus on the very sensor that records the image and so is immune to the alignment errors described above. Again, take a look at the example on the Stanford page, which lets you play with a slider to get an idea of how contrast detection works.
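One simple acutance measure is the sum of squared differences between neighbouring pixels. The sketch below uses that measure to pick the sharpest of several lens positions. Real cameras use their own filters and measurement windows; the pixel rows here are invented examples.

```python
def acutance(row: list[float]) -> float:
    """Sum of squared differences between neighbouring pixels: higher means sharper."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))


def sharpest_position(rows_by_position: dict[int, list[float]]) -> int:
    """Pick the lens position whose captured row of pixels has the highest acutance."""
    return max(rows_by_position, key=lambda pos: acutance(rows_by_position[pos]))


sharp = [0, 0, 0, 10, 10, 10]  # a crisp edge
blurred = [0, 1, 3, 7, 9, 10]  # the same edge, spread out by defocus
print(acutance(sharp), acutance(blurred))  # 100 26
print(sharpest_position({0: blurred, 1: sharp, 2: blurred}))  # 1
```

Squaring the differences rewards one large jump over many small ones, so a crisp edge scores higher than a gradual ramp covering the same total change.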

Contrast detection is slower than phase detection, not least because the camera cannot tell from acutance alone whether it is front- or back-focused, so the hunting process takes longer. This is a particular problem with a constantly moving subject. For example, if the subject moves forward (towards the camera) after focus is achieved, a phase detection system would know that it is now back-focused and micro-adjust accordingly (as happens with tracking); a contrast detection system, however, would simply know that peak acutance has been lost and would start hunting again. This makes contrast detection a less desirable method for DSLRs.
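The hunting can be sketched as a hill climb. Because a single contrast reading carries no direction, the search has to try a step, check whether contrast rose, and reverse or shrink the step when it did not. The `sharpness` callback stands in for measuring acutance at a lens position. The quadratic curve peaking at position 37 is an invented example, and step-halving is just one simple search strategy, not any camera's firmware.

```python
from typing import Callable


def hill_climb(sharpness: Callable[[int], float], start: int, step: int = 8) -> tuple[int, int]:
    """Contrast-detection search; returns (best position, number of sharpness readings)."""
    pos = start
    best = sharpness(pos)
    readings = 1
    direction = +1  # no direction information is available, so start with a guess
    while step >= 1:
        moved = False
        for d in (direction, -direction):  # try the current direction, then the other
            candidate = pos + d * step
            value = sharpness(candidate)
            readings += 1
            if value > best:
                pos, best, direction, moved = candidate, value, d, True
                break
        if not moved:
            step //= 2  # the peak lies within one step either side: refine
    return pos, readings


pos, readings = hill_climb(lambda p: -(p - 37) ** 2, start=0)
print(pos, readings)
```

Many of those readings are spent overshooting the peak and backing up, which is the hunting you see when a contrast detection system focuses.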

With the development of on-sensor phase detection, there is also the option of a hybrid of both methods to achieve fast and accurate focus: phase detection gets to approximate focus quickly (because it can home in on the focal point faster), and contrast detection fine-tunes it.
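A hybrid system can be sketched as two stages. The lens jumps straight to the phase-detection estimate, and a short contrast search checks the neighbouring positions. Here `phase_estimate` is simply passed in as a number, and the sharpness curve is the same invented quadratic used for illustration; both are assumptions, not a real camera interface.

```python
from typing import Callable


def hybrid_focus(sharpness: Callable[[int], float], phase_estimate: int, fine_step: int = 2) -> int:
    """Jump to the phase-detection estimate, then refine with a short contrast search."""
    pos = phase_estimate  # coarse stage: one reading gives direction and distance
    best = sharpness(pos)
    step = fine_step
    while step >= 1:  # fine stage: contrast detection checks the neighbours
        for candidate in (pos - step, pos + step):
            value = sharpness(candidate)
            if value > best:
                pos, best = candidate, value
                break
        else:
            step //= 2  # neither neighbour is sharper: narrow the search
    return pos


print(hybrid_focus(lambda p: -(p - 37) ** 2, phase_estimate=35))  # 37
```

Because the contrast stage starts close to the peak, it needs only a handful of readings instead of a full hunt.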

Phase Detection Sensors

Now that we’ve outlined the two primary focus methods, let’s take a closer look at phase detection autofocus sensors themselves. As mentioned above, this is the main focusing method used in DSLRs. I say “main” because most cameras nowadays also offer “Live View”. This shooting mode projects the sensor image onto the rear LCD screen, but to do this the camera must flip the reflex mirror up so that light can reach the sensor. With the mirror out of the light path, no light is directed to the dedicated AF unit, so the camera has to use other methods (typically contrast detection) to achieve focus.

Just like image sensors, these phase detection devices vary widely in performance, which depends on a host of factors (including the lens attached to the camera body).

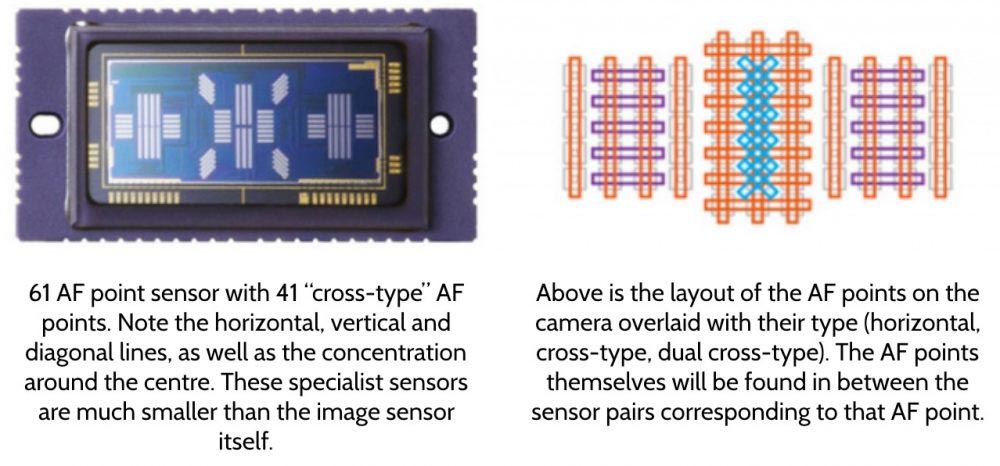

First there’s the most oft-quoted statistic: the number of AF points. More is better. The Canon 5D Mark III has 61 AF points, the 7D Mark II 65, and an entry-level model like the Rebel T5 (EOS 1200D) 9. The EOS 650, introduced in 1987, had one. But it’s no good having 65 points if they’re all bunched in the centre of the viewfinder, so coverage is also important: you don’t want to leave too many blind spots where the point of focus might fall between AF points. Even on higher-end DSLRs, the AF points typically cover no more than 50% of the viewfinder area. This is for both technical reasons (discussed below) and practical ones: you’re unlikely to want the very corner of the viewfinder as your focal point, and even if you did, focus lock lets you focus first and then recompose the scene.

Say, for instance, that you’re trying to photograph a tree trunk with just two vertical sensors in the camera’s AF unit. These sensors are looking for light intensity patterns, but because the trunk covers both sensors entirely, the only patterns available are those produced by the texture of the bark. If, however, you tried to focus using two horizontal sensors, the light intensity pattern would be far more pronounced: each strip would span the trunk, its edge, and the background beyond, making it much easier to align the patterns across the sensors and achieve focus.
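The tree-trunk example can be checked numerically with a synthetic scene: a dark vertical trunk with faint bark texture against a bright background. The pixel values and layout are invented for illustration. Contrast is measured as the sum of squared differences between neighbouring samples along each strip.

```python
WIDTH, HEIGHT = 12, 12


def pixel(x: int, y: int) -> int:
    """Synthetic scene: a dark trunk in columns 4-7 with faint bark texture on a bright background."""
    if 4 <= x <= 7:
        return 50 + 2 * (y % 2)  # bark: a tiny row-to-row variation
    return 200  # background


def strip_contrast(values: list[int]) -> int:
    """Sum of squared differences between neighbouring samples along a sensor strip."""
    return sum((b - a) ** 2 for a, b in zip(values, values[1:]))


vertical_strip = [pixel(5, y) for y in range(HEIGHT)]  # runs along the trunk
horizontal_strip = [pixel(x, 5) for x in range(WIDTH)]  # crosses both edges
print(strip_contrast(vertical_strip), strip_contrast(horizontal_strip))  # 44 43808
```

The vertical strip sees only the bark texture. The horizontal strip crosses both edges of the trunk and sees roughly a thousand times more contrast to lock onto.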

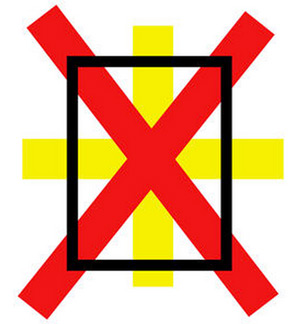

“Cross-type” sensors are simply a combination of horizontal and vertical sensors, which between them stand a much better chance of focusing regardless of the orientation of the subject. Adding diagonal sensors takes this one step further, ensuring that the camera can achieve accurate focus whatever the subject (well, almost!). With these diagonal cross-type or “dual cross” AF points, the combination of vertical, horizontal, and two diagonal orientations means that there are now eight individual sensors for a single dual cross AF point. With this in mind, take a look at the 5D Mark III’s AF sensor alongside a diagram indicating the type of each AF point in the viewfinder, and you should begin to see how the two match.
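Extending the same idea, an AF point can be modelled as a set of strip orientations. The point succeeds if any one of them sees enough contrast. The scene below (a bare horizontal edge such as a horizon), the strip length and the contrast threshold are all illustrative assumptions.

```python
def pixel(x: int, y: int) -> int:
    """Synthetic scene: bright sky above a dark horizon at y = 6 (no vertical detail)."""
    return 200 if y < 6 else 50


# Unit steps along each strip orientation. Each orientation is a pair of strips,
# so a dual cross point using all four orientations has 4 * 2 = 8 sensors.
ORIENTATIONS = {
    "horizontal": (1, 0),
    "vertical": (0, 1),
    "diagonal": (1, 1),
    "anti-diagonal": (1, -1),
}


def strip_contrast(cx: int, cy: int, dx: int, dy: int, half_length: int = 5) -> int:
    """Sum of squared neighbour differences along a strip centred on (cx, cy)."""
    values = [pixel(cx + k * dx, cy + k * dy) for k in range(-half_length, half_length + 1)]
    return sum((b - a) ** 2 for a, b in zip(values, values[1:]))


def can_focus(cx: int, cy: int, orientations: list[str], threshold: int = 100) -> bool:
    """An AF point succeeds if any of its strip orientations sees enough contrast."""
    return any(strip_contrast(cx, cy, *ORIENTATIONS[name]) >= threshold for name in orientations)


print(can_focus(6, 6, ["horizontal"]))  # False: a horizontal-only point
print(can_focus(6, 6, ["horizontal", "vertical"]))  # True: a cross-type point
print(can_focus(6, 6, list(ORIENTATIONS)))  # True: a dual cross point
```

A horizontal-only point sits along the horizon and sees a flat pattern. Adding a second orientation rescues it.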

Next there is the distance between each pair of sensors: the further apart they are, the more a slight focus error is amplified, allowing the camera to fine-tune focus to a more precise level. This sounds good, but there is a big catch: lenses with a small maximum aperture (i.e. a large f-number such as ƒ/5.6) produce a cone of light too narrow to reach AF strips placed far apart, at the edges of the AF sensor.
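The aperture limit comes down to geometry. For a thin lens focused at infinity, the half-angle θ of the light cone satisfies tan θ = 1 / (2N), where N is the f-number. The sketch assumes, as a simplification, that an AF pair rated for f/N looks through the lens at the edge of an f/N cone. On that assumption, it only receives light from a lens at least that fast.

```python
import math


def cone_half_angle_deg(f_number: float) -> float:
    """Half-angle of the light cone from a lens at this f-number (thin lens, focused at infinity)."""
    return math.degrees(math.atan(1 / (2 * f_number)))


def pair_gets_light(lens_max_f_number: float, pair_f_number: float) -> bool:
    """Simplifying assumption: a pair rated for f/N needs a cone at least as wide as an f/N cone."""
    return cone_half_angle_deg(lens_max_f_number) >= cone_half_angle_deg(pair_f_number)


for n in (2.8, 5.6, 8):
    print(f"f/{n}: cone half-angle {cone_half_angle_deg(n):.1f} degrees")  # 10.1, 5.1, 3.6

print(pair_gets_light(2.8, 5.6))  # True: an f/2.8 lens feeds a narrow-baseline pair
print(pair_gets_light(5.6, 2.8))  # False: an f/5.6 cone misses a wide-baseline pair
```

Halving the f-number roughly doubles the cone angle. That is why the widely spaced, high-precision pairs are reserved for fast lenses.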

Remember that before you press the shutter, the lens is always open at its maximum aperture, regardless of the aperture shown on your LCD display. This is why the viewfinder doesn’t turn dark when you roll the dial to ƒ/16, and why the depth of field seen through the viewfinder doesn’t increase when you focus at that setting. Try it for yourself: set your camera to ƒ/16, press the depth-of-field preview button (if your camera has one), and watch the viewfinder turn dark!
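The standard thin-lens depth-of-field formulas show how much the viewfinder's wide-open view differs from the final exposure. The sketch uses hyperfocal distance H = f² / (N·c) + f. It assumes a 50 mm lens, a subject at 3 m, and a 0.03 mm circle of confusion (a common full-frame convention).

```python
import math


def dof_limits_mm(focal_mm: float, f_number: float, subject_mm: float, coc_mm: float = 0.03) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


for n in (1.8, 16):
    near, far = dof_limits_mm(50, n, 3000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
# f/1.8: sharp from 2.82 m to 3.20 m
# f/16: sharp from 1.92 m to 6.92 m
```

With an f/1.8 lens you compose through a zone of sharpness under 0.4 m deep. Stopping down to ƒ/16 for the exposure stretches that zone to about 5 m, which you only see when you press the preview button.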

Take another look at the Canon 5D Mark III’s diagram with an explanation of the AF points and their required aperture.

With lenses of ƒ/2.8 or faster, the 5 centre AF points are diagonal cross-type (“dual cross”) points; up to ƒ/5.6 they are cross-type; and beyond ƒ/5.6 they are unusable (this only becomes a realistic concern with a slow, low-quality lens, or a very long focal length combined with an extender). The diagram below shows how the AF points change if you attach an ƒ/5.6 lens to the camera.
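The breakdown above can be written as a small lookup. The extender helper shows why extenders matter: a 1.4x extender costs one stop and a 2x extender two. The function names are hypothetical, and the thresholds simply follow the description in this article rather than Canon's full specification.

```python
def centre_point_type(max_f_number: float) -> str:
    """Type of the 5D Mark III's 5 centre AF points for a lens of this maximum aperture,
    following the breakdown described above (a simplification)."""
    if max_f_number <= 2.8:
        return "dual cross"
    if max_f_number <= 5.6:
        return "cross-type"
    return "unusable"


def with_extender(lens_f_number: float, magnification: float) -> float:
    """Effective maximum f-number behind an extender (1.4x costs one stop, 2x two stops).
    Rounded to one decimal so that 4 * 1.4 compares as 5.6, not 5.6000000000000005."""
    return round(lens_f_number * magnification, 1)


print(centre_point_type(2.8))  # dual cross
print(centre_point_type(with_extender(4, 1.4)))  # cross-type (f/4 becomes f/5.6)
print(centre_point_type(with_extender(4, 2.0)))  # unusable (f/4 becomes f/8)
```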

Finally, there is speed (driven by the processor), precision (how reliably the system achieves correct focus over several shots of the same scene), and sensitivity. The last of these determines the AF system’s performance in low light and is given as an EV range. You’ve probably seen the lens hunt back and forth for a focal point in low light many times before; the lower the bottom of the EV range, the less likely this is to happen.
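Each step of 1 EV is a doubling or halving of light, so small differences in a quoted EV range are significant. The sketch converts EV differences into light ratios and EV values into approximate scene luminance. It uses the reflected-light meter equation L = K · 2^EV / S, assuming ISO 100 and the commonly used calibration constant K = 12.5. The −1 EV and −3 EV ratings are hypothetical, not figures for any particular camera.

```python
def light_ratio(ev_a: float, ev_b: float) -> float:
    """How many times more light EV a represents than EV b (each EV step doubles the light)."""
    return 2 ** (ev_a - ev_b)


def luminance_cd_m2(ev: float, iso: float = 100, k: float = 12.5) -> float:
    """Approximate scene luminance for an EV, from L = K * 2**EV / S (K = 12.5 assumed)."""
    return k * 2 ** ev / iso


# A hypothetical AF system rated down to -3 EV versus one rated down to -1 EV:
print(light_ratio(-1, -3))  # 4: it can focus in a quarter of the light
print(luminance_cd_m2(-3))  # 0.015625 cd/m², a very dim scene
```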